EAIDB 1H2023 Report

State of the ethical AI ecosystem.

Introduction

The Ethical AI Database (EAIDB) is a curated collection of startups that are either actively trying to solve problems that AI and data have created or are building methods to unite AI and society in a safe and responsible manner.

EAIDB is now over a year old! We started in May 2022 with a mission to provide awareness and visibility of the ethical AI ecosystem through systematic categorization and updates. Over the last six months, a lot has happened, particularly in the generative AI space. Companies in EAIDB have had to contend with new technology, and some have even found a market in it.

There has been a decline in the number of new responsible AI enablement firms founded each year, but the products coming out are far more deliberate and no longer hype-driven. We continue to expand our coverage of the ecosystem and have been working closely with partners who share our vision.

View our latest market map here.

This report compiles the insights we have gained throughout the first half of 2023. We cover market movements within many of our categories, highlights for the period, and more. EAIDB publishes reports and market maps on a semiannual basis.

Additions & Deletions

We've made a number of additions and deletions to the ecosystem. We're constantly validating and reviewing our member companies, and these past six months have been busier than ever. We welcome nearly 70 new companies and firms to the ethical AI ecosystem. We're now covering over 250 responsible AI enablement companies across all major geographic regions. Welcome to EAIDB!

We've also more strictly enforced our criteria for being part of the database. We want to reiterate that we only include early-stage startups (founded post-2015, Series C and earlier) whose primary mission is aligned with one of our categories. While there is a lot of good work being done by more mature companies in the space (like Microsoft, Amazon, H2O, etc.), their primary mission is elsewhere. Our insights will always include a nod to what these bigger players are up to. We have also identified some companies in EAIDB that we feel no longer fit our criteria upon closer inspection. Some are inactive, and some we feel do not directly relate to responsible AI or its many subcategories. As we continue to refine our criteria, EAIDB will always be transparent in the changes made to its map and constituents. The following companies were modified (either removed or changed):

- Just Insure has a parent company called SF Insuretech that focuses on fair insurance. SF Insuretech has been included in the database.

- Parity AI has rebranded as Vera.

- Deepvail and Kymera Labs appear to be inactive.

- Aplisay, Owl.co, FutureAnalytica, Cuelebre, Rooster Insurance, Pendella, Layke Analytics, and Netacea have primary missions that are only indirectly related to responsible AI enablement.

- Several open source frameworks in the database were outdated and under-maintained. We have removed EML XAI, Pymetrics Audit AI, FairLens, and Oracle Skater.

- Some companies in the database were too mature. We have removed Eightfold AI and Flock Safety, both Series E companies, as well as BlendScore (founded 2014).

- Adalan AI has been moved from consulting to AI GRC as they are actively developing a SaaS product.

1H2023 Highlights

Generative AI

The first half of this year was all about generative AI. The market has benefited greatly from the increased skepticism that providers (particularly OpenAI) have faced regarding security, data sourcing, environmental cost, toxic outputs, and much more. The truth is, LLMs are the perfect storm of everything wrong with the machine learning process. In response, almost every major provider along the ML value chain has adapted its services to account for some aspect of these downsides (or, at the least, to support LLM development).

Some of the EAIDB startups that adapted quickly have benefited from the hype, which also comes on the heels of the EU AI Act. Together, these factors have created a lot of movement in this ecosystem.

We at EAIDB think that, while GenAI is incredibly powerful, its position in the hype cycle (which seems to power every discussion on the internet) is reminiscent of Web3 and crypto. To put the hype in perspective, some estimates put generative AI's growth rate well below that of other, far more usable and controlled technologies, like causal AI or federated learning (granted, the total market size for these latter technologies is much, much smaller).

Global Demand

Demand is increasing steadily. The EU will be the first to pass an all-encompassing AI Act, which will surely do for the responsible AI market what GDPR did for privacy. Other countries like China, India, and Australia are following suit as AI rapidly approaches maturity.

There is still limited incentive for businesses that are not in highly regulated environments because the value of responsible AI enablement has not yet been demonstrated in tangible terms. This is changing, however, in part due to generative AI, which has shown most people in technology the real impact of problems like hallucination, misinformation, toxicity, illegally acquired or biased data, and lack of visibility. Businesses want to harness the power of this technology without exposing themselves to these additional risks. That takes some level of investment, whether the decision is to build or to buy. Large enterprises will most likely build their own solutions, whereas medium-to-large enterprises may choose to buy. Smaller organizations globally are still identifying their pain points.

Funding Patterns

Many new funding rounds were raised in 1H2023, even though the VC market has cooled substantially since 2021 due to limited capital availability and surprising financial events like SVB's collapse. The hype around GenAI hasn't actually helped dealmaking, either: it is quite clear that most of the market does not really understand GenAI companies and their technology.

Interestingly, the number of funding rounds within EAIDB has stayed relatively on pace with 2022. The total number of rounds raised in the first half of 2023 is comparable to last year's despite these headwinds.

Open Source Proliferation

In a push for transparency, quite a few startups in EAIDB offer open source repositories with a “freemium” model: come for the framework and the packages, stay and pay for the scale. HuggingFace did this brilliantly by first establishing a community for open source models and methods relating to LLMs and adjacent technology, then providing scale via strategic partnerships with Amazon AWS and Microsoft Azure. We've seen more of these come out of the woodwork recently, some of them (like Deepchecks and Giskard AI) even raising rounds to continue their mission. It's an interesting business model that leverages the power of crowdsourcing (a formidable force, given that even Google and OpenAI admit their real competition is the open source community!).

Funding Activity

33 responsible AI enablement startups raised funding in 1H2023.

| Company | Amount (millions USD) | Round | Lead Investors |
| --- | --- | --- | --- |
| Trustible | 1.6 | Pre-Seed | Harlem Capital Ventures |
| Brighter AI | Undisclosed | Undisclosed | Deutsche Bahn Digital Ventures |
| Preamble | Undisclosed | Series A | Undisclosed |
| Cohere | 270 | Series C | Inovia Capital |
| Bria | 10 | Series A | Entree Capital |
| Giskard AI | Undisclosed | Grant | EIC Accelerator |
| Truata | 0.05 | Grant | PETs Prize Challenges |
| Troj AI | Undisclosed | Grant | Google for Startups Canada |
| Deeploy | 2.7 | Seed | Shaping Impact Group |
| Kanarys | 5.0 | Series A | Seyen Capital |
| Monitaur | 4.6 | Seed | Cultivation Capital |
| Fairly AI | 1.7 | Seed | Flying Fish Partners, Backstage Capital, X Factor Ventures |
| Deepchecks | 14.0 | Seed | Alpha Wave Ventures |
| Ethical Intelligence Associates | Undisclosed | Grant | Scotland Start-Up Awards |
| Equalture | 3.2 | Undisclosed | Shoe Investments |
| Hazy | 9.0 | Series A | Conviction VC |
| Humanly.io | 6.0 | Convertible Note | Undisclosed |
| Stratyfy | 10.0 | Undisclosed | Truist Ventures, Zeal Capital Partners |
| Clearbox AI | Undisclosed | Grant | Undisclosed |
| Syntheticus | Undisclosed | Non-Equity Assistance | Undisclosed |
| Fiddler AI | Undisclosed | Undisclosed | Alteryx Ventures, Mozilla Ventures |
| Trust Lab | 15.0 | Series A | Foundation Capital, US Ventures |
| Betterdata | 1.7 | Seed | Investible |
| Gretel | 0.8 | Undisclosed | Enzo Ventures, Extension Fund, Inveready |
| Cranium AI | 7.0 | Seed | KPMG, SYN Ventures |
| MeVitae | 0.6 | Grant | InnovateUK |
| Calypso AI | 23.0 | Series A | Paladin Capital Group |
| FedML | 11.5 | Seed | Camford |
| Flower Labs | 0.5 | Pre-Seed | Y Combinator |
| Lelapa AI | 2.4 | Seed | Mozilla VC, Atlantica Ventures |
| Onetrust | 50 | Undisclosed | Undisclosed |
| Themis AI | 1.4 | Undisclosed | Undisclosed |
| X0PA AI | 1.4 | Debt Financing | Undisclosed |

Acquisitions & Exits

There was one major exit in the first half of 2023. The acquired company, Superwise, specialized in model observability and monitoring. This is one of the only major acquisitions within the model monitoring space, and it comes on the heels of a very active 2022 M&A year for responsible AI enablement. In 2022, HR firms Talenya and Headstart were acquired, along with content moderation startup Oterlu AI and data privacy startup Statice GmbH.

Blattner Technologies acq. Superwise

Blattner Technologies, a digital transformation and predictive analytics provider, acquired Superwise.ai to add to their portfolio of AI-related offerings and services.

Company Highlights

Through the first half of this year, some companies stood out with their innovative solutions. We'll be closely tracking their progress as the space evolves. EAIDB has no motive in highlighting these companies other than to promote their missions and products.

Global Copyright Exchange (GCX)

As generative AI grows at an unprecedented pace, the only constant is the increasing value of clean, relevant data. GCX is a platform and data provider that has been collecting original music content and is licensing it to those who want to leverage it for model training. The data it has collected is ethically sourced and copyright-cleared, and it even includes methodically documented metadata on each piece (instruments used, vocal characteristics, etc.). Especially with the added catalyst of viral AI-generated music on platforms like Instagram and TikTok, companies looking to create their own musical applications or songs will need high-quality, properly sourced datasets.

Fiddler AI

It is difficult to find a company that has had more milestones in the first six months of this year than Fiddler. Fiddler raised funding not once, not twice, but three times in four months. Alteryx Ventures, Dentsu Ventures, and Mozilla VC have all put their faith in the responsible MLOps company. Dentsu's investment is particularly interesting because it enables Fiddler to pursue a growth strategy in Japan and Southeast Asia, within a wider Asian market where countries are rapidly engaging with AI (China, India, and Singapore are among the more active). Fiddler has also released Fiddler Auditor and other LLMOps tools meant to monitor LLMs and generative AI models in production.

Lelapa AI

Lelapa AI is the only firm in EAIDB headquartered in Africa. The South African company raised funding from Mozilla VC earlier this year to continue its mission of delivering high-quality, responsible AI-based solutions in various areas. Its first product, Vulavula, is an NLP-as-a-service offering focused on underrepresented languages. Lelapa is one of the firms shaping Africa's mindset around AI and how to harness its power responsibly. In a world where AI efforts are concentrated in the Western hemisphere, Lelapa is a critical component of the AI community, and its work continues to drive impact.

Category Trends

Each category in EAIDB represents a different "type" of ethical AI service and therefore displays very different dynamics over the course of a year. Below are some highlighted trends that seem to be driving the industry forward.

For more information on how we define our categories, visit our methodology.

Data for AI

Good data is more important than ever.

Within the Data for AI space, the big theme (intertwined, of course, with generative AI) is data sourcing and labeling. Not only are LLMs data-hungry, their performance also scales with data quality: for organizations building their own LLMs, data quality often matters more than data quantity, because high-quality data allows for smaller, more lightweight models that still perform exceptionally well. In addition, representative data that has been cleared for copyright purposes and purged of toxicity is required to build models that can surpass OpenAI's in usefulness.

We've learned a lot from OpenAI's less-than-ideal methods: from paying Kenyan workers $2/hour for toxicity labeling to copyright infringement, the GPT series is a reiteration of some of the worst aspects of machine learning and artificial intelligence. The importance of responsibility in these instances cannot be overstated.

In the sourcing space, labelers like Snorkel AI are accompanied by more innovative approaches such as StageZero (assembling global language datasets through mobile games for more representative and performant audio/voice algorithms) and GCX (a source of music and audio content that has been cleared for use in algorithms). The value of data itself has skyrocketed because the modeling problem has been solved.

MLOps and ModelOps

MLOps companies take advantage of confusion around Large Language Models (LLMs).

Much of this report covers the generative AI hype and the response across the ecosystem. The MLOps and ModelOps companies in EAIDB have largely reacted positively and have adapted or built aspects of LLMOps to capture some of the use cases in language modeling. There is a great opportunity here simply because the number of new tools, models, papers, and ideas around the topic has skyrocketed to the point where comparing the various approaches is nearly impossible.

However, we expect that these solutions will not dominate the market going forward. Just as traditional machine learning turned from an art to a science with low-code platforms, AutoML, and the entire “model and platform builders” space, so too will language modeling become a science. In fact, this has already begun to take place with new research and platforms like MosaicML (acq. by Databricks). There is also some new research on "AutoGPT" (think of a self-training, self-finetuning GPT variant). Read the paper here.

In addition, Fiddler was recently funded by Dentsu Ventures to scale their business in Japan and Southeast Asia. This is one of the first instances of international recognition of a responsible AI startup through a funding round, and Fiddler certainly leads the pack in momentum.

AI GRC

AI GRC startups face threats from all over the ML value chain, but the leading products are clearly differentiated.

AI GRC products cover everything from organization-wide transparency to holistic legal and regulatory compliance to model value and risk assessment. As the "catch-all" at the end of a machine learning pipeline, AI GRC products face consistent competition from all sides. One of the most prevalent trends here is the sheer number of companies from other primary business lines revealing new GRC products to build on their existing customer base and give their users a more all-encompassing view of their model lifecycles.

Ultimately, time will tell who the winners in this space are, though some companies like Monitaur and Credo have pulled ahead due to their positioning and holistic reach over the machine learning lifecycle.

Another interesting trend is the absence of vertical AI GRC products. Of the 32 AI GRC companies in EAIDB, only two lean toward a specific vertical. A few companies, such as FairNow for HR and Monitaur for insurance, focus on the full array of use cases within a particular vertical, but such companies are relatively rare. This may be because global demand is still low, so most AI GRC companies prefer to stay horizontal to capture a wider client base. There is certainly space for vertical solutions tailored to the nuances and use cases of highly regulated industries.

Some companies are choosing to remain horizontal but are focusing more on the language modeling and generative AI side - Preamble, Deepbrainz AI, and Titaniam are examples.

Model and Platform Builders

HR model-builders are dying out as other horizontal-oriented companies take over. Finance and healthcare are rapidly scaling.

Within the model and platform builders, we have startups that expressly develop and sell their algorithms or proprietary technology to clients. Examples include toxic content moderation services (e.g., Modulate, Trust Lab) and HR algorithms (e.g., Kanarys, Pave).

Human Resources (HR)

In this report, we focus on two verticals: HR and finance. The decline in new HR-specific startups and algorithms has been noted repeatedly in EAIDB's previous reports and continues into the first half of 2023. Most notably, about five new startups per year entered this space from 2015 to 2020, yet EAIDB has not identified a single new company targeting it since 2020.

This may simply be because HR teams are implementing their own ML: as barriers to this technology continue to fall, the build vs. buy decision leans increasingly towards “build.” Newer EAIDB startups in the HR space target GRC or other high-level, post-implementation use cases.

Lastly, as the more mature vertical in EAIDB, this space has also seen its fair share of exits thus far. Four acquisitions (Happyr, Praice, Headstart, Talenya) and one liquidation (Ceretai) have given us a glimpse at what consolidation might look like for other verticals.

Financial Services

Finance is almost the opposite story. There has not been much momentum in the finance vertical for responsible AI enablement just yet, but there are certainly relevant catalysts pushing the industry towards adopting better AI. According to the Consumer Financial Protection Bureau's (CFPB) 2022 report to the US Congress on fair lending, 174 institutions were cited for fair lending-related violations, and fair lending examinations and targeted reviews increased by 146% from 2020 to 2022. The CFPB has also historically pointed to “digital marketing fairness” and redlining as adjacent concerns. This market is wide open, with only a few strong players such as FairPlay AI, Evispot, and Stratyfy. Building this technology in a financial context requires exceptional technical discipline as well as the ability to demonstrate value (a recurring theme within the responsible AI ecosystem).

Alternative Machine Learning

Causal and federated AI are growing at faster rates than GenAI.

On the Alternative ML side, there are really three frameworks that stand out against the backdrop of centralized ML: causal AI, federated learning, and neurosymbolic AI. We won't cover neurosymbolic AI in this report because UMNAI is really the only startup spearheading this effort.

Causal AI

It is important to note that, according to some estimates, causal AI is growing at a much faster rate than generative AI. This is potentially because of its wide range of use cases in healthcare and other experiment-driven fields where generative AI is just not as useful. There are discussions, however, about uniting the two: why not give generative AI models the ability to make causal inferences? This may not even be that far away. A paper released earlier this year covers exactly this topic.

Within the causal AI market, EAIDB has recognized CausaLens as one of the only fully horizontal causal AI platforms focused on delivering high-fidelity and explainable causal AI capabilities to businesses.

Federated Learning

Still a growing market, federated learning solves problems associated with high data transfer costs and sensitive data usage by allowing machine learning algorithms to be constructed in a distributed fashion such that data never leaves its home.
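To make "data never leaves its home" more concrete, below is a minimal sketch of federated averaging (FedAvg), one common federated learning algorithm, in plain Python. Everything in it is an illustrative assumption: the helper names (`make_client`, `local_update`, `fed_avg`), the three hypothetical clients fitting a one-feature linear model, and the learning rate, epoch, and round counts. It is not a description of how any platform mentioned in this report works.

```python
import random


def make_client(rng, n, true_w=3.0, true_b=1.0):
    """Hypothetical client dataset: n noise-free points on y = true_w * x + true_b."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, true_w * x + true_b) for x in xs]


def local_update(weights, data, lr=0.1, epochs=5):
    """Run a few epochs of full-batch gradient descent on one client's private data.

    Only the resulting parameters are returned; the raw (x, y) pairs stay local.
    """
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of mean squared error with respect to w and b.
        grad_w = sum(2.0 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2.0 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def fed_avg(global_weights, clients, rounds=100):
    """Server loop: broadcast weights, collect local updates, average them by sample count."""
    for _ in range(rounds):
        updates = [(local_update(global_weights, data), len(data)) for data in clients]
        total = sum(n for _, n in updates)
        w = sum(params[0] * n for params, n in updates) / total
        b = sum(params[1] * n for params, n in updates) / total
        global_weights = (w, b)
    return global_weights


if __name__ == "__main__":
    rng = random.Random(0)
    clients = [make_client(rng, n) for n in (20, 50, 30)]
    w, b = fed_avg((0.0, 0.0), clients)
    print(f"learned w={w:.3f}, b={b:.3f}")  # should be close to w=3, b=1
```

The server only ever sees model parameters, never the clients' (x, y) pairs. Weighting each client by its sample count follows the original FedAvg formulation; production frameworks add pieces this sketch omits, such as secure aggregation, client sampling, and handling of non-IID data.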

Platforms from Integrate AI and Flower Labs are bringing federated learning to businesses. There is also growing interest in Large Language Models (LLMs) trained in a federated environment. Companies like DynamoFL and FedML aim to provide this functionality to alleviate some of the privacy concerns around language modeling. This is an early and very specific market that depends on both technical factors (such as increased computational complexity) and federated learning adoption trends.

AI Security

AI Security is fast becoming a VC-favorite product type.

The newest category addition to EAIDB, AI Security consists of “security for AI,” not “AI for security.” Startups in this area address some of the more dangerous aspects of models in production. This category also draws the most investor attention right now because it is the most approachable and understandable from a business value perspective.

Companies in this space offer model testing solutions (such as adversarial testing, model inversion testing, penetration testing, and prompt injection testing) and sometimes couple them with dashboards or model inventories (akin to some products in the GRC space). Those that provide a more holistic view of an organization's model attack surface (Protect AI, Cranium AI, etc.) target CISOs or other C-level executives. Others have strong proprietary technology meant to thoroughly test and secure AI pipelines (Adversa AI, Robust Intelligence, etc.).
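As a rough illustration of what adversarial testing means in practice, the sketch below applies the fast gradient sign method (FGSM), one of the simplest evasion attacks, to a hypothetical logistic-regression model and measures how accuracy holds up as the perturbation budget `eps` grows. The model weights, samples, and `eps` values are made up for illustration, and the helper names are our own; commercial AI security tools run far broader attack suites, and this is not a description of any vendor's product.

```python
import math


def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def fgsm(w, b, x, y, eps):
    """Fast gradient sign method for a logistic-regression model.

    For log-loss, the gradient with respect to the input is (p - y) * w,
    so each feature is shifted by eps in the sign of that gradient,
    i.e. in the direction that increases the loss.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


def adversarial_accuracy(w, b, samples, eps):
    """Share of samples still classified correctly after an FGSM perturbation of size eps."""
    correct = 0
    for x, y in samples:
        x_adv = fgsm(w, b, x, y, eps)
        pred = 1 if predict_proba(w, b, x_adv) >= 0.5 else 0
        correct += int(pred == y)
    return correct / len(samples)


if __name__ == "__main__":
    # Hypothetical model and labeled samples.
    w, b = [2.0, -1.0], 0.0
    samples = [([1.0, 0.5], 1), ([-1.0, 0.0], 0)]
    for eps in (0.0, 0.3, 0.6):
        print(f"eps={eps}: accuracy={adversarial_accuracy(w, b, samples, eps):.2f}")
```

With these toy numbers, both samples survive perturbations of size 0.3, but at `eps=0.6` the first sample flips to the wrong class and accuracy falls to 0.50. Tracking accuracy across a range of budgets like this is one simple way to quantify a model's robustness.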

NightDragon released an insightful post recently that broke down their perspective of this budding market. Though their list of displayed companies and funding information is somewhat incomplete, it does provide a holistic look at what they describe as "a new frontier of AI innovation." Astoundingly, every one of the AI Security companies in EAIDB has raised funding already.

Firms like Unusual Ventures have recently voiced their interest in the space, while others like Paladin Capital Group, Flying Fish Partners, and more have already made active investments.

Open Source

The rise of freemium business models and generative AI moves open source forward.

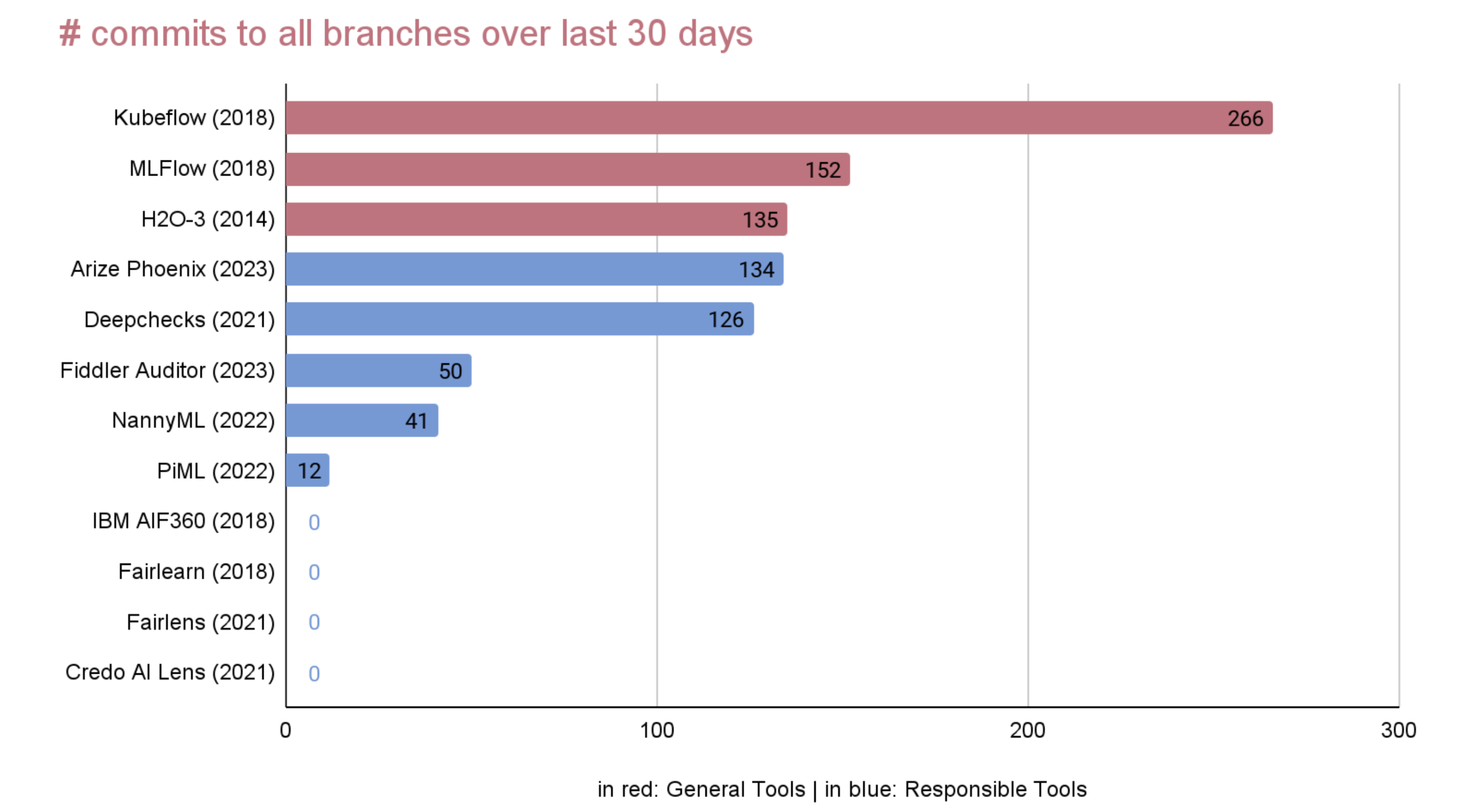

Continuing our theme from the end of 2022, we find that there are still large gaps in the open source domain for responsible enablement. The two most abundant types of libraries in this space revolve around MLOps and explainability. There are a few libraries for fairness, bias mitigation, etc., but these are undermaintained and understaffed relative to some of the more operational repositories.

Recently, however, there has been a large influx of open source tools (released both by companies in the MLOps space and by independent organizations) for LLM maintenance, development, and validation. Nearly every major responsible MLOps company in EAIDB has released packages to assist with LLMOps (e.g., Arize Phoenix, Fiddler Auditor, TruEra TruLens). In addition, several entities have created open source libraries for regulating LLM behavior and security; Microsoft Guidance, Microsoft Counterfit, Rebuff AI, and Guardrails AI are all examples.

One notable event from this year is Mozilla VC's investment in Fiddler AI. Mozilla's commitment to open source principles and its vision of supplying powerful tools to developers at low cost likely motivated its entry into the space: the funding round preceded Fiddler's launch of its open source Fiddler Auditor.

Outside of LLMs and generative AI, there is still a limited set of tools for processes like compliance and governance. For small-to-medium enterprises (SMEs), the price tag on larger solutions is steep. Some companies, like KomplyAI, aim to provide these capabilities at a lower cost.

Another interesting trend is the rise of freemium models within EAIDB companies. This type of business model is similar to HuggingFace's - provide an open source library for free but charge for scale and additional features/tools. HuggingFace democratizes large models and provides tooling to implement them, but charges for scale via inference endpoints, CPUs/GPUs, AutoTrain, etc. Examples of this are Flower Labs, Giskard AI, Themis AI, and Deepchecks. All of these companies were founded post-2018 with the majority founded post-2020.

EAIDB Value Add

Our goal is to drive value for our universe of startups and to do as much as possible to support them on their journey towards widespread responsible AI enablement. Here are some ways we've been able to do that so far:

- Lead & Sales Sourcing

- Investment Sourcing

- Marketing and Promotion

- Market Research

We've heard from several of our constituent companies that EAIDB's transparency and ability to filter, search, and compare companies within the same solution space has helped clients find products that match their needs.

EAIDB has drawn attention from VC firms and founders alike and has used its unique position to connect the two parties on more than one occasion.

EAIDB has over 2,100 followers on LinkedIn and has received over 3,000 downloads on our various reports. We attract attention from the public, policymakers, founders, and investors alike.

As a fully independent organization, EAIDB takes an objective and methodical approach. We do market research on behalf of governments and organizations to investigate and identify market opportunities, profile companies, and offer in-depth comparisons of the technology used.

EAIDB Partnerships & Initiatives

EAIDB has historically worked with institutions that promote knowledge and awareness of the ethical AI ecosystem and why it is so critical in today's automated world.

EAIDB and EAIGG partnered on a video panel series covering three categories from EAIDB's prior classification titles. These videos can be found on EAIGG's LinkedIn.

EAIDB and Nordic Innovation collaborated on a "Nordic Ethical AI Map" (viewable here) to increase awareness of organizations that are actively working to improve the way AI is built. Nordic Innovation is responsible for fostering cross-border trade and innovation in the Nordic region.

EAIDB and the Montreal AI Ethics Institute (MAIEI) collaborated on an ethical AI series covering each of the categories in the database. MAIEI regularly publishes content related to AI ethics.

About EAIDB

The Ethical AI Database is a live database of curated startups attempting to solve some of the most damaging aspects of AI / ML in society. We offer semiannual market map updates and reports, and we also periodically release content through various media channels. For more on how we curate our database, view our methodology. To submit your company to our list, fill out the submission form. If you'd like to work with us, you can reach out here. Thank you to the Ethical AI Governance Group (EAIGG) for assisting us with our mission.

Disclaimer: logos were taken from LinkedIn company profiles or were found via search engines, but belong to their respective firms.